The web (paradoxically similarly to a human being) is particularly fond of his own age, and much less of what happened before its birth. Historical events, news, documents and cultural artifacts at large from 1994 to nowadays are way easier to find than the ones antecedent the middle nineties, the earlier the worst, and the later the better. It seems that the web reflects more and more a structure like a river flow, always heading towards its mouth, more than remembering when and where its own water started to flow or even originated before that. Probably because online giants are aware of this cultural gap, they think that the final establishment of the web reputation as a trusted medium goes through the migration in the online form of traditional media, and this means by letting people access what they used to trust more: printed stuff. But digitalizing printed sources is a big task, a massive effort in trying to archive printed content and make them available online. It could be felt as the final passage from the printed to the digital form but actually we should ask ourselves: can this be properly defined as »archiving«? I will try to answer to this question later, but I would like to quote what James Rifkin wrote in his book »The age of access«: »The physical container becomes secondary to the unique services contained in it... Books and journals on library shelves are giving precedence to access to services via the Internet.« We still have unparalleled cultural resources in old media formats. Billions of books and magazines for examples are still readable, despite, in some cases they are a few centuries old. But their access is complicated, unless you are in the same physical place. On the other hand, we have global networks, indexed networks that can be searched through private search engines. But as stated they are mainly fond of the last two decades, and there is only a small fraction of what is physically available. Google thought this is a problem for humankind. So Nikesh Arora, president of Google\'s Global Sales Operations and Business Development, confessed that Google founders\' dream is »the creation of a universal library.« More than a dream it is a giant business opportunity, selling ads that will be displayed while reading the book of choice selected from an immense online library, in the classic benevolent and fatherly Google style, but this is another story. What Google is trying to do is to digitize (and possibly get rights to) huge chunks of cultural printed matters. With five million dollars as initial investment they can claim 7 millions books scanned, with 1 million already available in full preview. Most of the books are scanned using a special camera at a rate of 1.000 pages per hour. This approach has two sensitive problems. First: the access to this enormous body of culture is controlled and regulated by Google. It is not Unesco, it is Google. Second: their aim tends to be to acquire the most »universal« type of culture in order to be as popular as possible. Then what about the rest?

Ubuweb and Aaaaarg.org

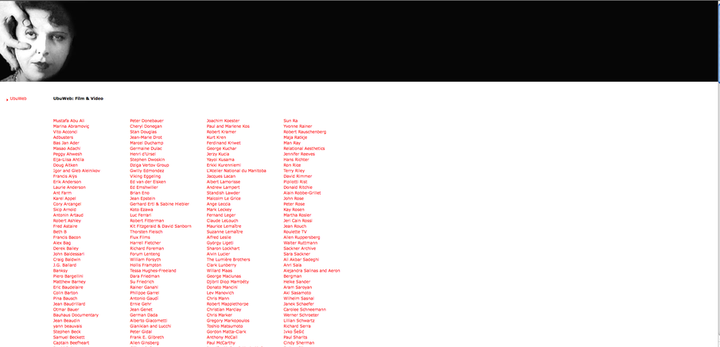

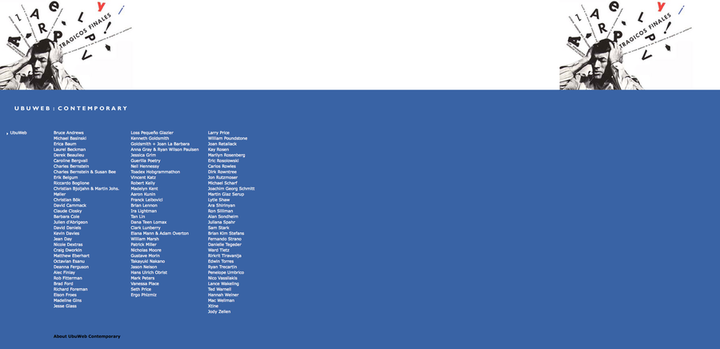

Talking about independent digital archives there are already excellent efforts. Ubuweb, for example, is a curated one, with curators filtering the precious material submitted by a community of enthusiasts. It embeds the virtues of being focused on a topic but also being perceived as a reference by a community that contributes actively »donating« precious digitalizations of rare materials (as an excellent library should aim to be). Aaaaarg.org was another one on the same wavelength: they are digitizing (and asking people to help digitize) hundreds of books and papers mainly related to academic research in art, media and politics. All the files were searchable as text and freely available for download through a registration and a few further technical steps. Their definition is kind of imaginative »AAAARG was created with the intention of developing critical discourse outside of an institutional framework. But rather than thinking of it like a new building, imagine scaffolding that attaches onto existing buildings and creates new architectures between them.« Are these processes »archiving«? The long lasting printed copies are still in the libraries, and only some physical accident can delete them. The digital copies can be deleted in a second, although, their spread can help in multiplying their access. So having multiple copies of the scanned files, spread in different places and in different computers would help in the end their collective memory, and so maybe help in parallel their preservation. And if you consider any »subculture« or literary, artistic, music movement you can easily individuate a few persons (journalists, historians, collectors, small institutions, obsessed fans) who have assembled in years impressive collections that just lie in one or a few rooms. These precious kinds of heritage are individually preserved but, in a word, they are invisible to the rest of the scene and the world. If they would be shared then their context would become a public resource, a common archive that would have important consequences on both the online presence of the publisher and the general historical perception. If Wikipedia is the biggest effort in sharing knowledge on the most general and cultural perspective, then single but networked online archives of printed content should make the difference.

Distributed Archives

In a way it is a similar mechanism to the so called »seeding« of P2P stuff. »Seeding«, in the P2P technical vocabulary means that if you want to »own« something you have to share it, at least a part of it. Building these archives of references, and scanning small productions would help sometimes to reconnect fragments that were lost over time, texts lent and never returned that would turn up again. But, we would ask ourselves: can we properly define these types of processes as »archiving«? I think we can not. All of the above is about »accessing«, and not »archiving« Only in a few decades we will know if your jpg and pdf files will still be there. But starting to take the responsibility to digitize them and share them is the first constructive step we can do. The resulting scenario can then be of archived »islands« of culture slowly and independently emerging in the internet and growing. They would be made by people who share passion and just want to share information and contribute to the access of important content that would be new for the web. It would be a shared memory as peer-to-peer has proved to be. It would be coupling the stability of our printed culture, and its being static, with the ephemerality of its digitalization, and even more its consequent dynamic characteristics. But such a project requires permanent »seeders« to stick with the peer-to-peer parallel, or people who want to be responsible. If we will be able to establish usable models, platforms and practices, we will probably help a small portion of culture to survive its own future.

http://aaaaarg.org/

http://www.ubu.com/